Stability Regularization for Discrete Representation Learning

Abstract

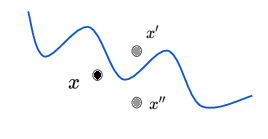

We present a method for training neural network models with discrete stochastic variables. The core of the method is stability regularization, a regularization procedure based on noise stability, a concept developed in Gaussian isoperimetric theory in the analysis of Gaussian functions. Stability regularization pushes the output of continuous functions of Gaussian random variables close to discrete (that is, binary or categorical) values without the need for significant manual tuning. The method can be used standalone or in combination with existing continuous relaxation methods. We validate the method in a broad range of settings, where it performs competitively with state-of-the-art methods.
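
As a rough illustration of the noise-stability idea, and not the paper's implementation, the sketch below estimates the quantity E[f(z) f(z')] by Monte Carlo, where z and z' are standard Gaussian vectors with correlation rho. The helper names (`noise_stability`, `soft_output`, `hard_output`), the choice rho = 0.9, and the sample count are all assumptions made for this example. It compares a smooth output (tanh) with a binary one (sign); both are odd functions bounded in [-1, 1], so the comparison is like for like.

```python
import numpy as np


def noise_stability(f, dim, rho, n_samples=100_000, seed=0):
    """Monte Carlo estimate of E[f(z) * f(z')] for rho-correlated Gaussians.

    Hypothetical helper for illustration only.
    """
    rng = np.random.default_rng(seed)
    z = rng.standard_normal((n_samples, dim))
    eps = rng.standard_normal((n_samples, dim))
    # z_corr is standard Gaussian and has correlation rho with z, coordinate-wise.
    z_corr = rho * z + np.sqrt(1.0 - rho**2) * eps
    return float(np.mean(f(z) * f(z_corr)))


def soft_output(z):
    # Smooth, non-discrete output in (-1, 1).
    return np.tanh(z[:, 0])


def hard_output(z):
    # Binary output in {-1, +1}: the indicator of a half-space, rescaled.
    return np.sign(z[:, 0])


stab_soft = noise_stability(soft_output, dim=1, rho=0.9)
stab_hard = noise_stability(hard_output, dim=1, rho=0.9)
print(f"tanh output: {stab_soft:.3f}")
print(f"sign output: {stab_hard:.3f}")
```

Under these assumptions the binary output scores higher than the smooth one, which is the intuition behind rewarding noise stability to encourage near-discrete outputs. For the sign function the estimate should be close to the known closed form (2/pi) * arcsin(rho).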

Publication

ICLR 2022