Attention-based Multi-Context Guiding for Few-Shot Semantic Segmentation

Abstract

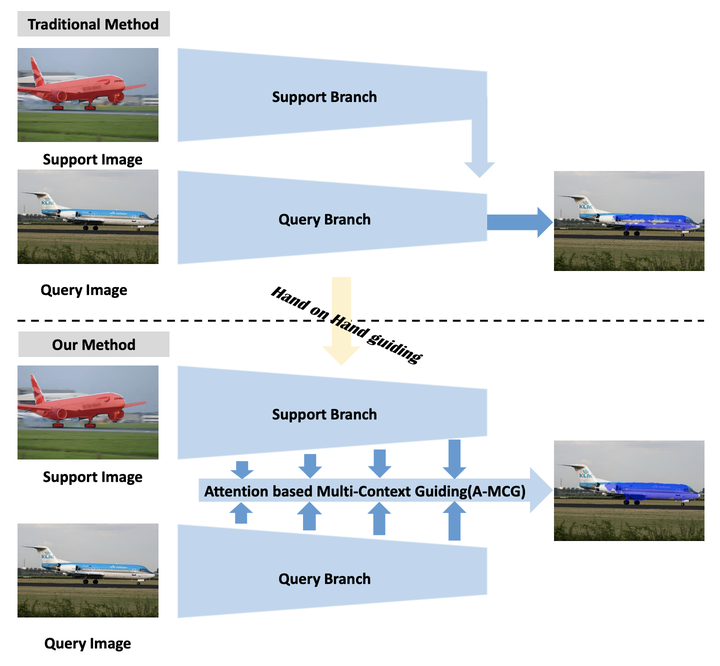

Few-shot learning is a nascent research topic, motivated by the fact that traditional deep learning methods require tremendous amounts of data. The scarcity of annotated data is even more challenging in semantic segmentation, since pixel-level annotation is more labor-intensive to acquire. To tackle this issue, we propose an Attention-based Multi-Context Guiding (A-MCG) network, which consists of three branches: the support branch, the query branch, and the feature fusion branch. A key differentiator of A-MCG is the integration of multi-scale context features between the support and query branches, which enforces better guidance from the support set. In addition, we adopt spatial attention along the fusion branch to highlight context information from several scales, enhancing self-supervision in one-shot learning. To address the fusion problem in multi-shot learning, a Conv-LSTM is adopted to collaboratively integrate the sequential support features and improve the final accuracy. Our architecture obtains state-of-the-art results on unseen classes in a variant of the PASCAL VOC12 dataset and performs favorably against previous work, with gains of 1.1% and 1.4% in mIoU in the 1-shot and 5-shot settings, respectively.
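For readers unfamiliar with the reported metric, the following is a minimal sketch of mean intersection-over-union (mIoU) in its generic form. It is an illustration under stated assumptions rather than the evaluation code used here: the function name `mean_iou`, the flat label-list input format, and the choice to skip classes absent from both prediction and ground truth are all hypothetical, and the exact protocol used for the reported numbers is not specified in this abstract.

```python
def mean_iou(pred, target, num_classes):
    """Generic mean IoU over flat per-pixel label lists.

    Assumption: classes that appear in neither `pred` nor `target`
    are skipped instead of being counted as IoU 0 or 1.
    """
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")

    ious = []
    for c in range(num_classes):
        # Pixels labeled c in both prediction and ground truth.
        inter = sum(1 for p, t in zip(pred, target) if p == c and t == c)
        # Pixels labeled c in at least one of the two.
        union = sum(1 for p, t in zip(pred, target) if p == c or t == c)
        if union == 0:
            continue  # class absent from both maps
        ious.append(inter / union)

    # Return 0.0 when no class was present at all.
    return sum(ious) / len(ious) if ious else 0.0


if __name__ == "__main__":
    # Toy 4-pixel example: 0 = background, 1 = foreground.
    prediction = [0, 0, 1, 1]
    ground_truth = [0, 1, 1, 1]
    print(mean_iou(prediction, ground_truth, num_classes=2))
```

In the toy example, class 0 has IoU 1/2 and class 1 has IoU 2/3, so the mean is 7/12. A gain of 1.1% in mIoU therefore corresponds to an absolute increase of 0.011 in this averaged ratio.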