Heterogeneous Non-Local Fusion for Multimodal Activity Recognition

Abstract

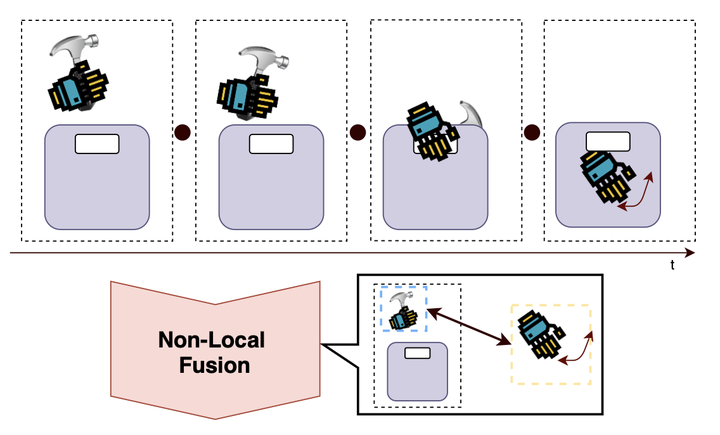

In this work, we investigate activity recognition using multimodal inputs from heterogeneous sensors. Activity recognition is commonly tackled from a single-modal perspective using videos. When multiple signals are used, they come from the same homogeneous modality, e.g., color and optical flow. Here, we propose an activity network that fuses multimodal inputs coming from completely different, heterogeneous sensors. We frame such heterogeneous fusion as a non-local operation. The key observation is that in a non-local operation, only the channel dimensions need to match. In the network, heterogeneous inputs are fused while each input keeps the shape and dimensionality that fits it. We outline both asymmetric fusion, where one modality serves to enforce the other, and symmetric fusion variants. To further promote research into multimodal activity recognition, we introduce GloVid, a first-person activity dataset captured with video recordings and smart glove sensor readings. Experiments on GloVid show the potential of heterogeneous non-local fusion for activity recognition, outperforming individual modalities and standard fusion techniques.
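The observation that only the channel dimensions need to match can be illustrated with a minimal NumPy sketch. This is not the network proposed here: the function name `asymmetric_nonlocal_fusion`, the projection matrices, the softmax normalization, the residual connection, and the feature shapes are all assumptions chosen for illustration.

```python
import numpy as np

def softmax(a, axis=-1):
    # Numerically stable softmax along the given axis.
    a = a - a.max(axis=axis, keepdims=True)
    e = np.exp(a)
    return e / e.sum(axis=axis, keepdims=True)

def asymmetric_nonlocal_fusion(x, z, w_theta, w_phi, w_g, w_out):
    """Hypothetical asymmetric non-local fusion (illustrative only).

    x: (n_x, c) positions of the main modality, flattened.
    z: (n_z, c) positions of the auxiliary modality, flattened.
    n_x and n_z may differ; only the channel size c is shared.
    """
    theta = x @ w_theta                      # queries from x: (n_x, d)
    phi = z @ w_phi                          # keys from z:    (n_z, d)
    g = z @ w_g                              # values from z:  (n_z, d)
    attn = softmax(theta @ phi.T, axis=-1)   # pairwise weights: (n_x, n_z)
    y = attn @ g                             # aggregated z features: (n_x, d)
    return x + y @ w_out                     # residual output keeps x's shape

rng = np.random.default_rng(0)
c, d = 8, 4                                  # assumed channel and embedding sizes
video = rng.standard_normal((4 * 7 * 7, c))  # assumed T*H*W video positions
glove = rng.standard_normal((16, c))         # assumed 16 sensor time steps
weights = [rng.standard_normal(s) * 0.1
           for s in [(c, d), (c, d), (c, d), (d, c)]]

fused_video = asymmetric_nonlocal_fusion(video, glove, *weights)
# One way to sketch a symmetric variant: also fuse in the other direction.
# Weights are reused here only for brevity.
fused_glove = asymmetric_nonlocal_fusion(glove, video, *weights)
print(fused_video.shape, fused_glove.shape)
```

In this sketch each output keeps the shape of the modality that supplies the queries, so neither input has to be resized or resampled to match the other.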