NeurIPS Fest 2024

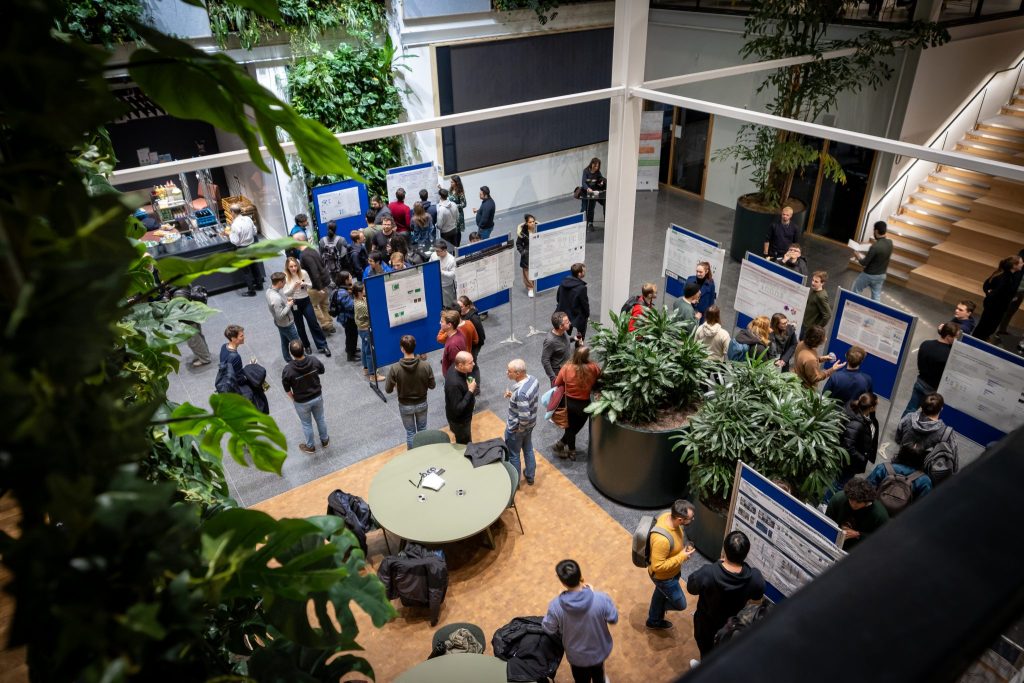

Annual NeurIPS-preview party spotlighting Amsterdam’s finest and latest research in machine learning

- Date

November 28, 2024

- Time

16:00-19:30

- Location

Lab42, Amsterdam Science Park

Event programme

16:00-17:00

Keynote Presentation

Christian A. Naesseth (University of Amsterdam)

Venue: L3.33 & L3.35

17:00-19:30

Poster Session

with bites & drinks

Venue: ground floor

Christian A. Naesseth

Assistant Professor of Machine Learning at the University of Amsterdam

- Keynote speaker

About the keynote speaker

Christian A. Naesseth is an Assistant Professor of Machine Learning at the University of Amsterdam, a member of the Amsterdam Machine Learning Lab, the lab manager of the UvA-Bosch Delta Lab 2, and an ELLIS member.

His research interests span statistical inference, uncertainty quantification, reasoning, and machine learning, as well as their application to the sciences. He is currently working on generative modeling (diffusions, flows, AI4Science), approximate inference (variational and Monte Carlo methods), and uncertainty quantification and hypothesis testing (E-values, conformal prediction). Previously, he was a postdoctoral research scientist with David Blei at the Data Science Institute, Columbia University. He completed his PhD in Electrical Engineering at Linköping University, advised by Fredrik Lindsten and Thomas Schön.

Diffusions, flows, and other stories

Generative models have taken the world by storm. Using generative modeling, a.k.a. generative AI, we can construct probabilistic approximations to any data-generating process. For text, large language models place distributions over the next token; for images, the distribution is often over pixel color values; and for molecules, it can be over a combination of atom types, positions, and various chemical features. This talk will explore some of the dominant paradigms, applications, and recent developments in generative modeling.

At NeurIPS 2024, his lab and collaborators will present five accepted papers.

Posters showcased at the event

Neural Flow Diffusion Models

- Grigory Bartosh

- Dmitry Vetrov

- Christian A. Naesseth

Space-Time Continuous PDE Forecasting using Equivariant Neural Fields

- David M. Knigge

- David R. Wessels

- Riccardo Valperga

- Samuele Papa

- Jan-Jakob Sonke

- Efstratios Gavves

- Erik J. Bekkers

Scalable Kernel Inverse Optimization

- Youyuan Long

- Tolga Ok

- Pedro Zattoni Scroccaro

- Peyman Mohajerin Esfahani

Rethinking Knowledge Transfer in Learning Using Privileged Information

- Danil Provodin

- Bram van den Akker

- Christina Katsimerou

- Maurits Kaptein

- Mykola Pechenizkiy

3-in-1: 2D Rotary Adaptation for Efficient Finetuning, Efficient Batching and Composability

- Baohao Liao

- Christof Monz

IPO: Interpretable Prompt Optimization for Vision-Language Models

- Yingjun Du

- Wenfang Sun

- Cees Snoek

AGALE: A Graph-Aware Continual Learning Evaluation Framework

- Tianqi Zhao

- Alan Hanjalic

- Megha Khosla

Input-to-State Stable Coupled Oscillator Networks for Closed-form Model-based Control in Latent Space

- Maximilian Stölzle

- Cosimo Della Santina

Reproducibility Study of "Robust Fair Clustering: A Novel Fairness Attack and Defense Framework"

- Iason Skylitsis

- Zheng Feng

- Idries Nasim

- Camille Niessink

Equivariant Neural Diffusion for Molecule Generation

- François Cornet

- Grigory Bartosh

- Mikkel Schmidt

- Christian A. Naesseth

PART: Self-supervised Pre-Training with Continuous Relative Transformations

- Melika Ayoughi

VISA: Variational Inference with Sequential Sample-Average Approximations

- Heiko Zimmermann

- Christian A. Naesseth

- Jan-Willem van de Meent

[Re] On the Reproducibility of Post-Hoc Concept Bottleneck Models

- Nesta Midavaine

- Gregory Hok

- Tjoan Go

- Diego Canez

- Ioana Simion

- Satchit Chatterji

SIGMA: Sinkhorn-Guided Masked Video Modeling

- Mohammadreza Salehi

- Michael Dorkenwald

- Fida Mohammad Thoker

- Efstratios Gavves

- Cees G. M. Snoek

- Yuki M. Asano

Fast yet Safe: Early-Exiting with Risk Control

- Metod Jazbec*

- Alexander Timans*

- Tin Hadži Veljković

- Kaspar Sakmann

- Dan Zhang

- Christian A. Naesseth

- Eric Nalisnick

On the Reproducibility of: "Learning Perturbations to Explain Time Series Predictions"

- Wouter Bant

- Ádám Divák

- Jasper Eppink

- Floris Six Dijkstra

“Studying How to Efficiently and Effectively Guide Models with Explanations” - A Reproducibility Study

- Adrian Sauter

- Milan Miletic

- Ryan Ott

- Rohith Saai Pemmasani Prabakara

Variational Flow Matching for Graph Generation

- Floor Eijkelboom

- Grigory Bartosh

- Christian A. Naesseth

- Max Welling

- Jan-Willem van de Meent

When Your AIs Deceive You: Challenges of Partial Observability in Reinforcement Learning from Human Feedback

- Leon Lang

- Davis Foote

- Stuart Russell

- Anca Dragan

- Erik Jenner

- Scott Emmons

FewViewGS: Gaussian Splatting with Few View Matching and Multi-stage Training

- Ruihong Yin

- Vladimir Yugay

- Yue Li

- Sezer Karaoglu

- Theo Gevers

TVBench: Redesigning Video-Language Evaluation

- Daniel Cores

- Michael Dorkenwald

- Manuel Mucientes

- Cees G. M. Snoek

- Yuki M. Asano

GO4Align: Group Optimization for Multi-Task Alignment

- Jiayi Shen

- Qi (Cheems) Wang

- Zehao Xiao

- Nanne Van Noord

- Marcel Worring

No Train, all Gain: Self-Supervised Gradients Improve Deep Frozen Representations

- Walter Simoncini

- Andrei Bursuc

- Spyros Gidaris

- Yuki M. Asano

Optimizing importance weighting in the presence of sub-population shifts

- Floris Holstege

- Bram Wouters

- Noud van Giersbergen

- Cees Diks

Reproducibility Study of Learning Fair Graph Representations Via Automated Data Augmentations

- Thijmen Nijdam

- Juell Sprott

- Taiki Papandreou-Lazos

- Jurgen de Heus

NeurIPS Fest 2024 is ELLIS Unit Amsterdam's annual NeurIPS-preview party, spotlighting Amsterdam's finest and latest research in machine learning.