Dynamic Prototype Convolution Network for Few-shot Semantic Segmentation

Abstract

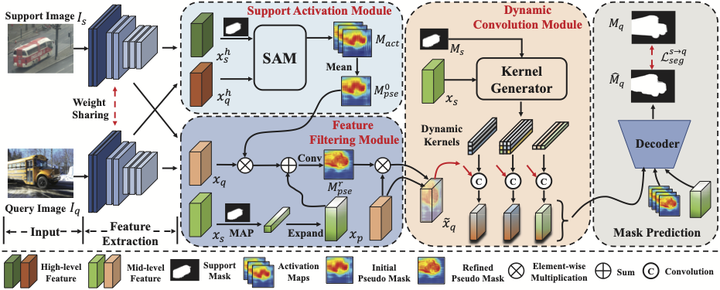

The key challenge in few-shot semantic segmentation (FSS) is how to design an effective interaction between support and query features and/or their prototypes under the episodic training scenario. Most existing FSS methods implement such support/query interactions solely through plain operations, e.g., cosine similarity and feature concatenation, to segment the query objects. However, these interaction approaches usually fail to capture the intrinsic object details in query images that are widely encountered in FSS; e.g., if the query object to be segmented has holes and slots, segmentation is almost always inaccurate. To this end, we propose a dynamic prototype convolution network (DPCN) to fully capture these intrinsic details for accurate FSS. Specifically, in DPCN, a dynamic convolution module (DCM) is first proposed to generate dynamic kernels from the support foreground; information interaction is then achieved by convolving the query features with these kernels. Moreover, we equip DPCN with a support activation module (SAM) and a feature filtering module (FFM) to generate a pseudo mask and filter out background information for the query images, respectively. Together, SAM and FFM mine enriched context information from the query features. Our DPCN is also flexible and efficient under the k-shot FSS setting. Extensive experiments on PASCAL-5$^i$ and COCO-20$^i$ show that DPCN yields superior performance under both 1-shot and 5-shot settings.
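To make the idea of support-derived dynamic kernels concrete, the following NumPy sketch pools the masked support foreground into one k x k kernel per channel and applies it to the query features as a depthwise convolution. It is only an illustration under stated assumptions: the function names `foreground_kernel` and `depthwise_conv`, the grid-pooling scheme, and the square kernel shape are hypothetical choices made here, not the DCM design described in the paper.

```python
import numpy as np


def foreground_kernel(support_feat, support_mask, k=3):
    """Pool masked support features into one k x k kernel per channel.

    support_feat: (C, H, W) feature map; support_mask: (H, W) binary mask.
    Hypothetical helper: the grid pooling is an assumption for illustration.
    """
    c, h, w = support_feat.shape
    masked = support_feat * support_mask[None]  # zero out background positions
    kernel = np.zeros((c, k, k))
    # Split the map into a k x k grid and average the foreground in each cell.
    ys = np.linspace(0, h, k + 1).astype(int)
    xs = np.linspace(0, w, k + 1).astype(int)
    for i in range(k):
        for j in range(k):
            cell = masked[:, ys[i]:ys[i + 1], xs[j]:xs[j + 1]]
            area = support_mask[ys[i]:ys[i + 1], xs[j]:xs[j + 1]].sum()
            # Cells without foreground contribute a zero kernel entry.
            kernel[:, i, j] = cell.sum(axis=(1, 2)) / max(area, 1e-6)
    return kernel


def depthwise_conv(query_feat, kernel):
    """Correlate each query channel with its own kernel (zero padding, odd k)."""
    c, h, w = query_feat.shape
    k = kernel.shape[-1]
    pad = k // 2
    padded = np.pad(query_feat, ((0, 0), (pad, pad), (pad, pad)))
    out = np.zeros((c, h, w))
    # Accumulate one shifted, channel-wise weighted copy per kernel position.
    for i in range(k):
        for j in range(k):
            out += kernel[:, i, j][:, None, None] * padded[:, i:i + h, j:j + w]
    return out


# Toy episode: 8-channel features on a 16 x 16 grid, square foreground region.
rng = np.random.default_rng(0)
support_feat = rng.standard_normal((8, 16, 16))
support_mask = np.zeros((16, 16))
support_mask[4:12, 4:12] = 1.0
query_feat = rng.standard_normal((8, 16, 16))

kernel = foreground_kernel(support_feat, support_mask)
response = depthwise_conv(query_feat, kernel)  # same shape as query_feat
```

Unlike a single cosine-similarity score against one prototype vector, the kernel here retains a coarse spatial layout of the support foreground, which is the property the dynamic-kernel idea relies on; how the kernels are actually shaped and combined in DPCN is not specified in this abstract.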