Modeling the temporal dynamics of neural responses in human visual cortex

Abstract

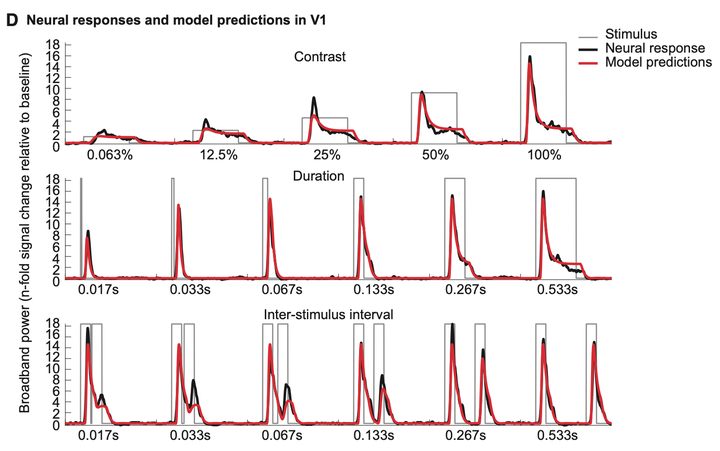

Cortical responses to visual stimuli exhibit complex temporal dynamics, including sub-additive temporal summation, reduced responses to repeated or sustained stimuli (adaptation), and slower dynamics at low contrast. Multiple computational models have been proposed to account for these dynamics in several measurement domains, including single-cell recordings, psychophysics, and fMRI. Comparing these models is challenging because they differ in model form, test stimuli, and measurement instrument. Here we present a new dataset that is well suited to comparing models of neural temporal dynamics. The dataset consists of electrocorticographic (ECoG) recordings from human visual cortex, which measure cortical neural population responses with high spatial and temporal precision. The stimuli were large, static contrast patterns that varied systematically in contrast, duration, and inter-stimulus interval (ISI). Time-varying broadband responses were computed as the power envelope of the band-pass filtered (50-170 Hz) voltage time course, recorded from a total of 126 electrodes in ten epilepsy patients and covering early (V1-V4) and higher-order (LO, TO, IPS) retinotopic maps. In all visual regions, the ECoG broadband responses show several nonlinear features: peak response amplitude saturates at high contrast and long stimulus durations; response latency decreases with increasing contrast; and the response to a second stimulus is suppressed at short ISIs and recovers at longer ISIs. These features were well predicted by a computational model (Zhou, Benson, Kay and Winawer, 2019) composed of a small set of canonical neuronal operations: linear filtering, rectification, exponentiation, and a delayed divisive gain control. These results demonstrate that a simple computational model built from canonical neuronal computations captures a wide range of temporal and contrast-dependent neuronal dynamics at millisecond resolution.
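The broadband measure described above (the power envelope of a voltage trace band-pass filtered at 50-170 Hz) can be sketched with NumPy alone. This is a minimal illustration under stated assumptions, not the authors' pipeline: the ideal FFT-domain band-pass filter, the FFT-based analytic signal (the same construction `scipy.signal.hilbert` uses), and the function name `broadband_power` are all assumptions. A real ECoG pipeline would more likely use a designed filter, possibly over several sub-bands, to limit edge effects.

```python
import numpy as np

def broadband_power(voltage, fs, band=(50.0, 170.0)):
    """Sketch: power envelope of a band-pass filtered voltage trace.

    Assumes an ideal FFT-domain band-pass filter and an FFT-based analytic
    signal; this is not the authors' filter design.
    """
    x = np.asarray(voltage, dtype=float)
    n = x.size
    spectrum = np.fft.fft(x)
    freqs = np.fft.fftfreq(n, d=1.0 / fs)  # frequency (Hz) of each FFT bin
    # Ideal band-pass: keep only bins whose |frequency| lies inside the band
    in_band = (np.abs(freqs) >= band[0]) & (np.abs(freqs) <= band[1])
    # Analytic-signal weights: keep DC (and Nyquist for even n),
    # double positive frequencies, zero negative frequencies
    weights = np.zeros(n)
    weights[0] = 1.0
    if n % 2 == 0:
        weights[n // 2] = 1.0
        weights[1 : n // 2] = 2.0
    else:
        weights[1 : (n + 1) // 2] = 2.0
    analytic = np.fft.ifft(spectrum * in_band * weights)
    # Instantaneous power = squared magnitude of the analytic signal
    return np.abs(analytic) ** 2

# Usage: 1 s of synthetic "voltage" at 1 kHz with an in-band and an out-of-band sinusoid
fs = 1000.0
t = np.arange(1000) / fs
voltage = 2.0 * np.sin(2 * np.pi * 100 * t) + 5.0 * np.sin(2 * np.pi * 10 * t)
power = broadband_power(voltage, fs)
```

In this example the 10 Hz component falls outside the band and is removed, while the 100 Hz sinusoid of amplitude 2 yields a constant power envelope of 4 at every sample.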
Finally, we present a software repository that implements models of temporal dynamics in a modular fashion, enabling the comparison of many models fit to the same data and analyzed with the same methods.
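The delayed divisive gain control model named above can be sketched as a chain of separate stages (linear filtering, rectification, exponentiation, divisive normalization by a delayed pool), in the modular spirit of such a repository. This is a hypothetical sketch, not the repository's code or the exact published parameterization: the gamma-shaped linear filter, the exponential low-pass filter for the normalization pool, the form r = |L|^n / (sigma^n + (|L| * h2)^n), the parameter values, and the helper names (`dn_model`, `make_stimulus`, `second_peak`) are all illustrative assumptions.

```python
import numpy as np

# Hypothetical sketch of a delayed divisive normalization (DN) model.
# Filter shapes, the exact normalization form, and parameter values are assumptions.

def gamma_irf(t, tau):
    """Gamma-shaped impulse response (t / tau) * exp(-t / tau), scaled to unit sum."""
    h = (t / tau) * np.exp(-t / tau)
    return h / h.sum()

def exp_irf(t, tau):
    """Exponential low-pass impulse response exp(-t / tau), scaled to unit sum."""
    h = np.exp(-t / tau)
    return h / h.sum()

def dn_model(stim, dt, tau1=0.05, tau2=0.1, n=2.0, sigma=0.1, kernel_len=1.0):
    """Predicted response to a stimulus contrast time course sampled every dt seconds."""
    t = np.arange(0.0, kernel_len, dt)
    # 1. Linear filtering (causal convolution, truncated to the stimulus length)
    linear = np.convolve(stim, gamma_irf(t, tau1))[: len(stim)]
    # 2. Rectification
    drive = np.abs(linear)
    # 3. Delayed normalization pool: low-pass filtered copy of the rectified drive
    pool = np.convolve(drive, exp_irf(t, tau2))[: len(stim)]
    # 4. Exponentiation and divisive gain control
    return drive**n / (sigma**n + pool**n)

def make_stimulus(contrast, duration, isi=None, onset=0.1, total=1.2, dt=0.001):
    """Hypothetical helper: one contrast pulse, or two pulses separated by isi seconds."""
    stim = np.zeros(int(round(total / dt)))
    starts = [onset] if isi is None else [onset, onset + duration + isi]
    for start in starts:
        i0 = int(round(start / dt))
        stim[i0 : i0 + int(round(duration / dt))] = contrast
    return stim

dt = 0.001
# Contrast dependence of the response to a single 500 ms pulse
r_high = dn_model(make_stimulus(1.0, 0.5), dt)
r_low = dn_model(make_stimulus(0.1, 0.5), dt)
print("peak (high, low):", r_high.max(), r_low.max())
print("time to peak, s (high, low):", np.argmax(r_high) * dt, np.argmax(r_low) * dt)

def second_peak(isi, duration=0.1, onset=0.1):
    """Peak response from the onset of the second of two 100 ms pulses onward."""
    r = dn_model(make_stimulus(1.0, duration, isi=isi), dt)
    return r[int(round((onset + duration + isi) / dt)):].max()

print("second-pulse peak (ISI 50 ms, 500 ms):", second_peak(0.05), second_peak(0.5))
```

With these illustrative settings, raising contrast from 0.1 to 1 increases the peak and moves it earlier, the response to a sustained pulse decays well below its transient peak, and the second of two pulses is much weaker at a 50 ms ISI than at a 500 ms ISI. These are the same qualitative features the abstract reports, though nothing here is fit to the ECoG data.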