Memory Attention Networks for Skeleton-Based Action Recognition

Abstract

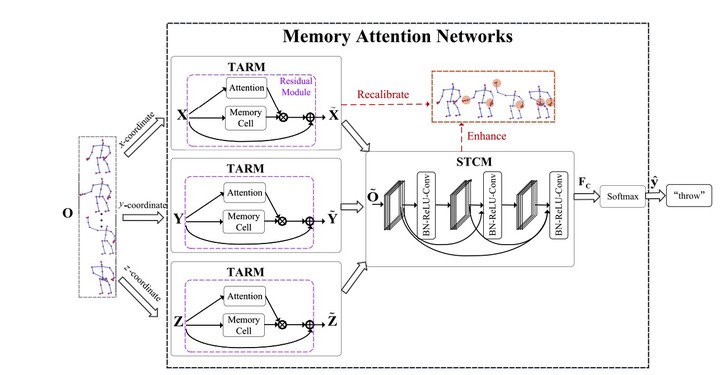

Skeleton-based action recognition has been extensively studied, but it remains an unsolved problem because of the complex variations of skeleton joints in 3-D spatiotemporal space. To handle this issue, we propose a novel temporal-then-spatial recalibration method named memory attention networks (MANs) and deploy MANs using the temporal attention recalibration module (TARM) and the spatiotemporal convolution module (STCM). In the TARM, a novel temporal attention mechanism is built on residual learning to recalibrate the frames of skeleton data temporally. In the STCM, the recalibrated sequence is transformed or encoded as the input of CNNs to further model the spatiotemporal information of the skeleton sequence. Based on MANs, a new collaborative memory fusion module (CMFM) is proposed to further improve the efficiency, leading to the collaborative MANs (C-MANs), which are trained with two streams of base MANs. TARM, STCM, and CMFM form a single network seamlessly and enable the whole network to be trained in an end-to-end fashion. Compared with the state-of-the-art methods, MANs and C-MANs improve the performance significantly and achieve the best results on six data sets for action recognition. The source code has been made publicly available at https://github.com/memory-attention-networks.
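To make the idea of residual temporal recalibration concrete, the following is a minimal sketch and not the TARM itself. It assumes that each frame receives one scalar score from some learned module (here simply passed in as a list), that the scores are normalized with a softmax over time, and that each frame is recalibrated in the residual form x_t + a_t * x_t. The function names `softmax` and `recalibrate_frames` and the flat per-frame coordinate layout are hypothetical choices made for illustration.

```python
import math


def softmax(scores):
    """Normalize raw per-frame scores into attention weights that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def recalibrate_frames(frames, scores):
    """Residually reweight each frame of a skeleton sequence.

    frames: list of T frames, each a flat list of joint coordinates.
    scores: list of T raw attention scores (assumed to come from a
            learned module; supplied by hand in this sketch).
    Returns (recalibrated_frames, attention_weights).
    """
    if len(frames) != len(scores):
        raise ValueError("need exactly one score per frame")
    weights = softmax(scores)
    # Residual form: x_t + a_t * x_t, so no frame is ever suppressed to zero.
    recalibrated = [
        [value + w * value for value in frame]
        for frame, w in zip(frames, weights)
    ]
    return recalibrated, weights
```

With equal scores every frame gets the same weight, so the sequence is scaled uniformly; a higher score for one frame increases its magnitude relative to the others while the identity path keeps the original signal intact.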