PIE-Net: Photometric Invariant Edge Guided Network for Intrinsic Image Decomposition

Abstract

Intrinsic image decomposition is the process of recovering the image formation components (reflectance and shading) from an image. Previous methods employ either explicit priors to constrain the problem or implicit constraints as formulated by their losses (deep learning). These methods can be negatively influenced by strong illumination conditions causing shading-reflectance leakages.
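
To make the decomposition problem concrete, the sketch below assumes the common Lambertian image formation model, in which an image is the element-wise product of reflectance and shading. The `compose` helper, the array sizes, and the random inputs are illustrative assumptions and are not taken from the paper. It also shows why the inverse problem is ill-posed: rescaling reflectance and shading in opposite directions yields the same image.

```python
import numpy as np

def compose(reflectance, shading):
    """Assumed Lambertian image formation: I = R * S (element-wise)."""
    return reflectance * shading

rng = np.random.default_rng(0)
reflectance = rng.uniform(0.1, 1.0, size=(4, 4, 3))  # per-pixel RGB albedo
shading = rng.uniform(0.2, 1.0, size=(4, 4, 1))      # per-pixel grayscale shading
image = compose(reflectance, shading)

# A different (reflectance, shading) pair reproduces exactly the same image,
# so the decomposition cannot be recovered without further constraints.
alt_reflectance = reflectance * 0.5
alt_shading = shading * 2.0
same_image = np.allclose(image, compose(alt_reflectance, alt_shading))
```

This ambiguity is what explicit priors or learned constraints are meant to resolve.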

Therefore, in this paper, an end-to-end edge-driven hybrid CNN approach is proposed for intrinsic image decomposition. Edges correspond to illumination invariant gradients. To handle hard negative illumination transitions, a hierarchical approach is taken including global and local refinement layers. We make use of attention layers to further strengthen the learning process.

An extensive ablation study and large-scale experiments are conducted, showing that it is beneficial for edge-driven hybrid IID networks to make use of illumination invariant descriptors and that separating global and local cues improves the performance of the network. Finally, it is shown that the proposed method obtains state-of-the-art performance and generalises well to real-world images.

Our model exploits illumination invariant features in an edge-driven hybrid CNN approach. The model is able to predict physically consistent reflectance and shading from a single input image, without the need for any specific priors. The network is trained without any specialised dataset or losses.

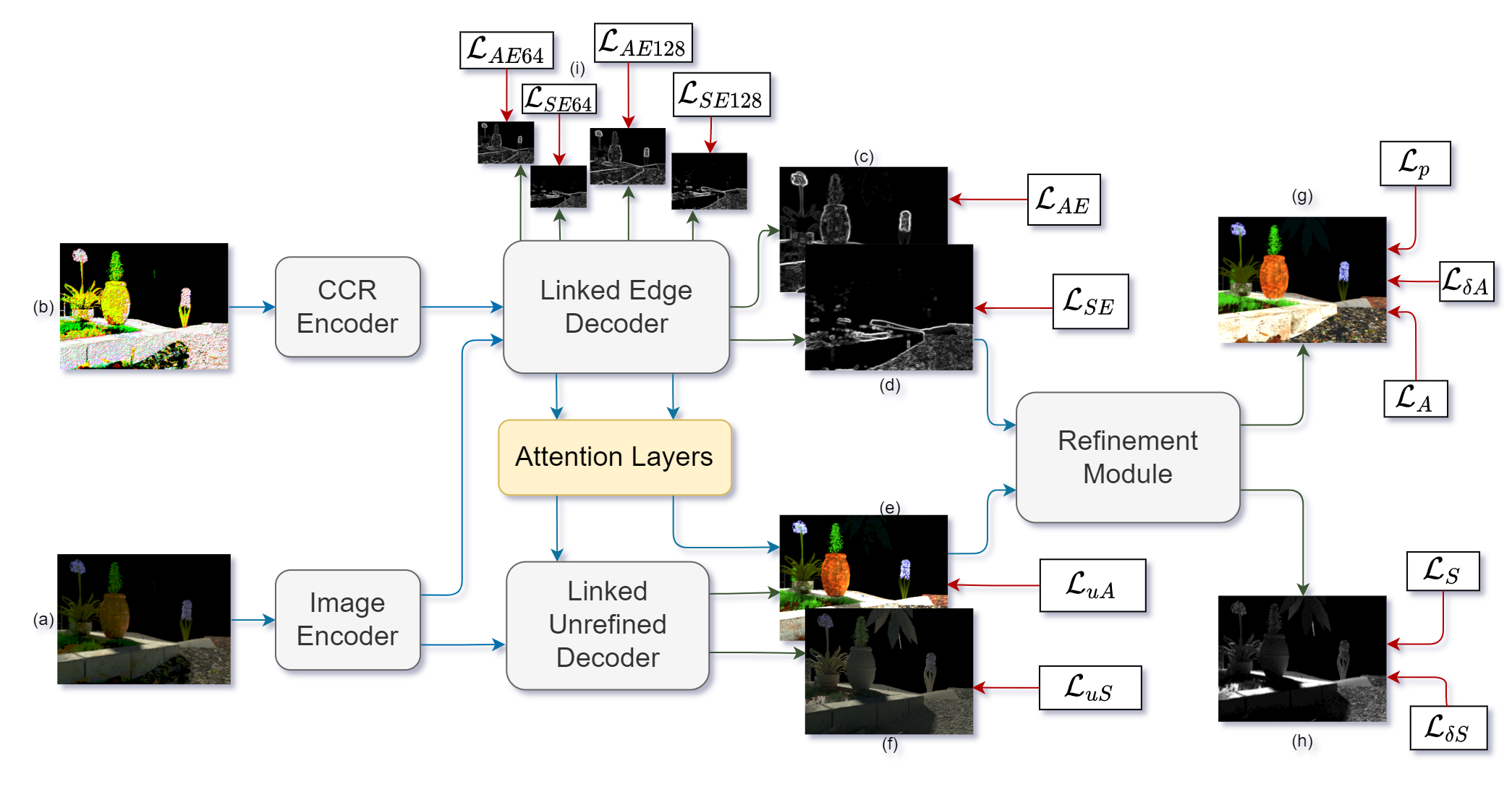

Overview of the proposed network. The architecture consists of 4 sub-modules. Inputs to the network are (a) the RGB image and (b) the CCR image, which is computed from (a). The outputs of the network are: (c) the reflectance edge, (d) the shading edge, (e) the unrefined reflectance prediction, (f) the unrefined shading prediction, (g) the final refined reflectance, (h) the final refined shading, and (i) scaled edge outputs @(64, 128).
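
As an illustration of how an illumination invariant input could be computed from the RGB image, the sketch below assumes that CCR refers to cross color ratios, which compare the colour channels of neighbouring pixels. The function name `log_ccr`, the choice of horizontal neighbours, the log-domain formulation, and the `eps` clamp are assumptions made for this example and may differ from the authors' implementation.

```python
import numpy as np

def log_ccr(image, eps=1e-6):
    """Log cross color ratios between horizontally adjacent pixels.

    image: float array of shape (H, W, 3) in RGB order.
    Returns shape (H, W - 1, 3) with log M_RG, log M_RB, log M_GB, where
    M_RG = (R_x1 * G_x2) / (R_x2 * G_x1) for neighbouring pixels x1, x2.
    """
    log_img = np.log(np.maximum(image, eps))   # clamp to avoid log(0)
    p, q = log_img[:, :-1], log_img[:, 1:]     # pixel x1 and its right neighbour x2
    r1, g1, b1 = p[..., 0], p[..., 1], p[..., 2]
    r2, g2, b2 = q[..., 0], q[..., 1], q[..., 2]
    m_rg = (r1 + g2) - (r2 + g1)
    m_rb = (r1 + b2) - (r2 + b1)
    m_gb = (g1 + b2) - (g2 + b1)
    return np.stack([m_rg, m_rb, m_gb], axis=-1)

# Synthetic check: per-pixel grayscale shading and a global light colour
# cancel out, so the descriptor depends on the reflectance only.
rng = np.random.default_rng(0)
reflectance = rng.uniform(0.1, 1.0, size=(8, 8, 3))
shading = rng.uniform(0.2, 1.0, size=(8, 8, 1))
light_colour = np.array([1.0, 0.8, 0.6])
image = reflectance * shading * light_colour
invariant = np.allclose(log_ccr(image), log_ccr(reflectance))
```

Under these assumptions the ratios respond to reflectance changes but not to shading or light colour, which is the property that makes such a descriptor useful for guiding reflectance edges.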

Results

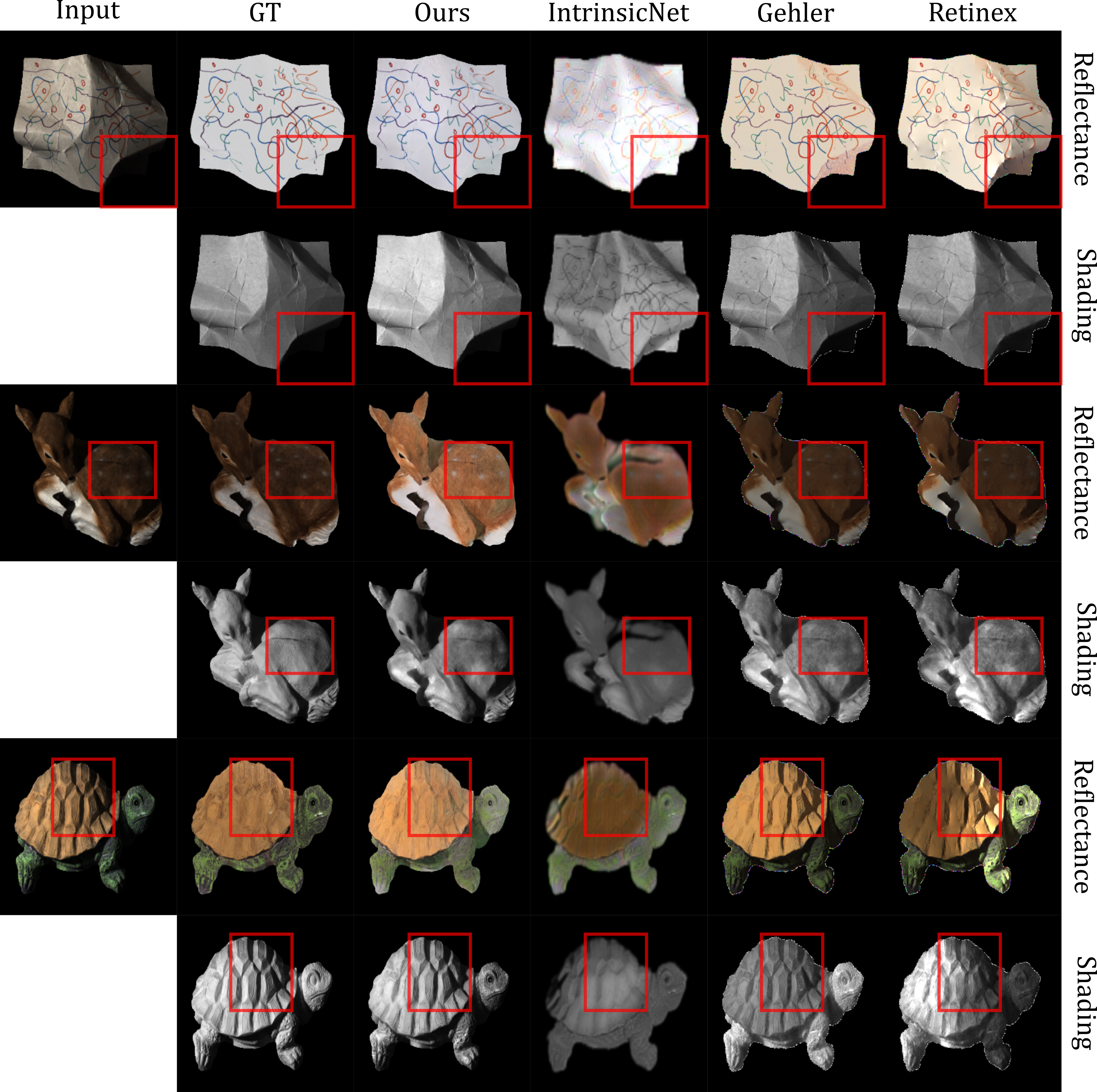

Visuals from the MIT Intrinsic test set. The predictions of the proposed algorithm are closer to the ground-truth IID components. IntrinsicNet completely misses the shadow on the paper, while the proposed algorithm correctly transfers it to the shading image. The deer and turtle examples show that the proposed algorithm properly disentangles geometric patterns from the reflectance, which is much flatter as a result.

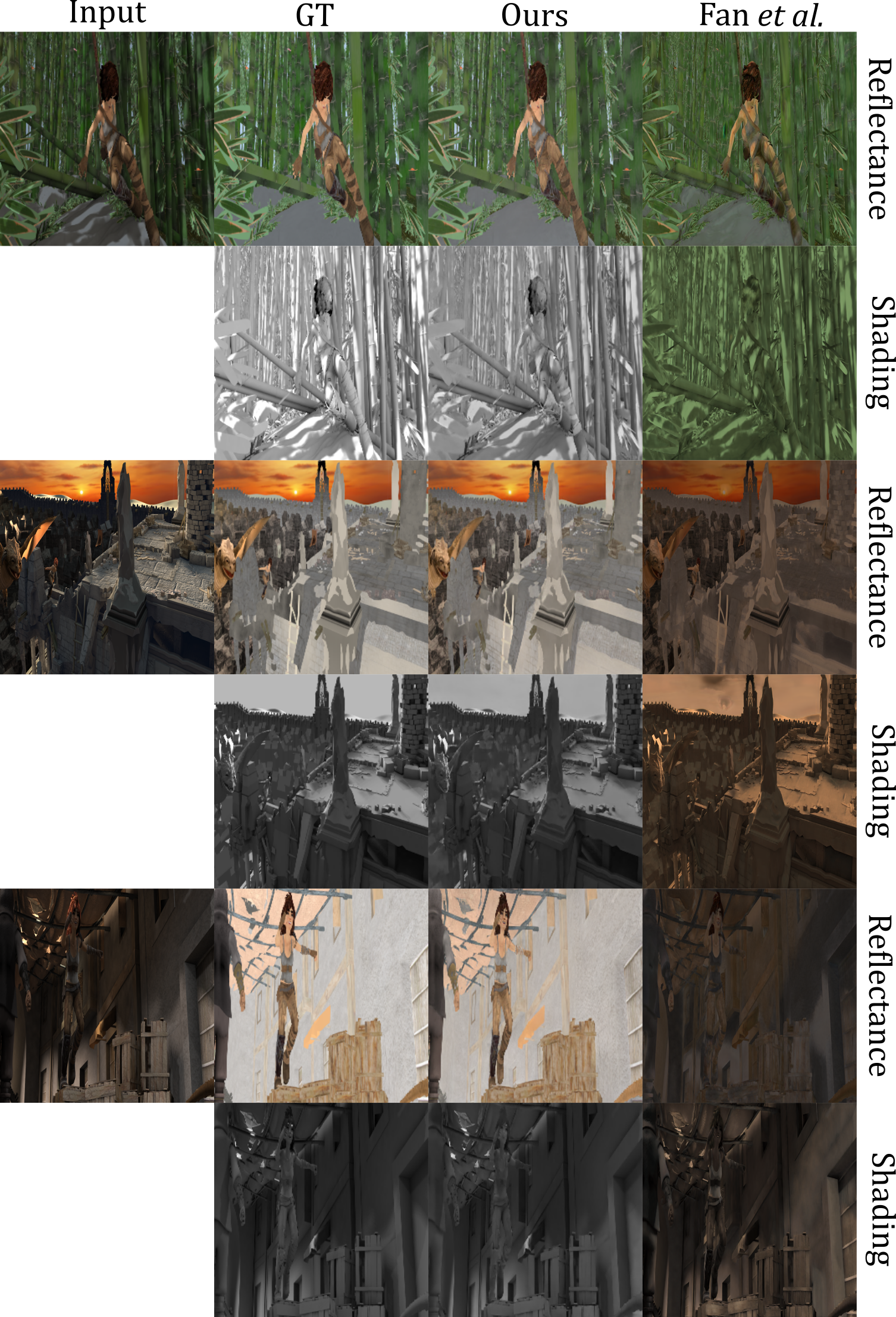

Results on the Sintel test set. It is shown that our network can remove the cast shadows, even from complex scenes like a forest. The outputs show that the reflectance is free from the cast shadows on the wall, while the shading image is free from the textures on the wooden box.

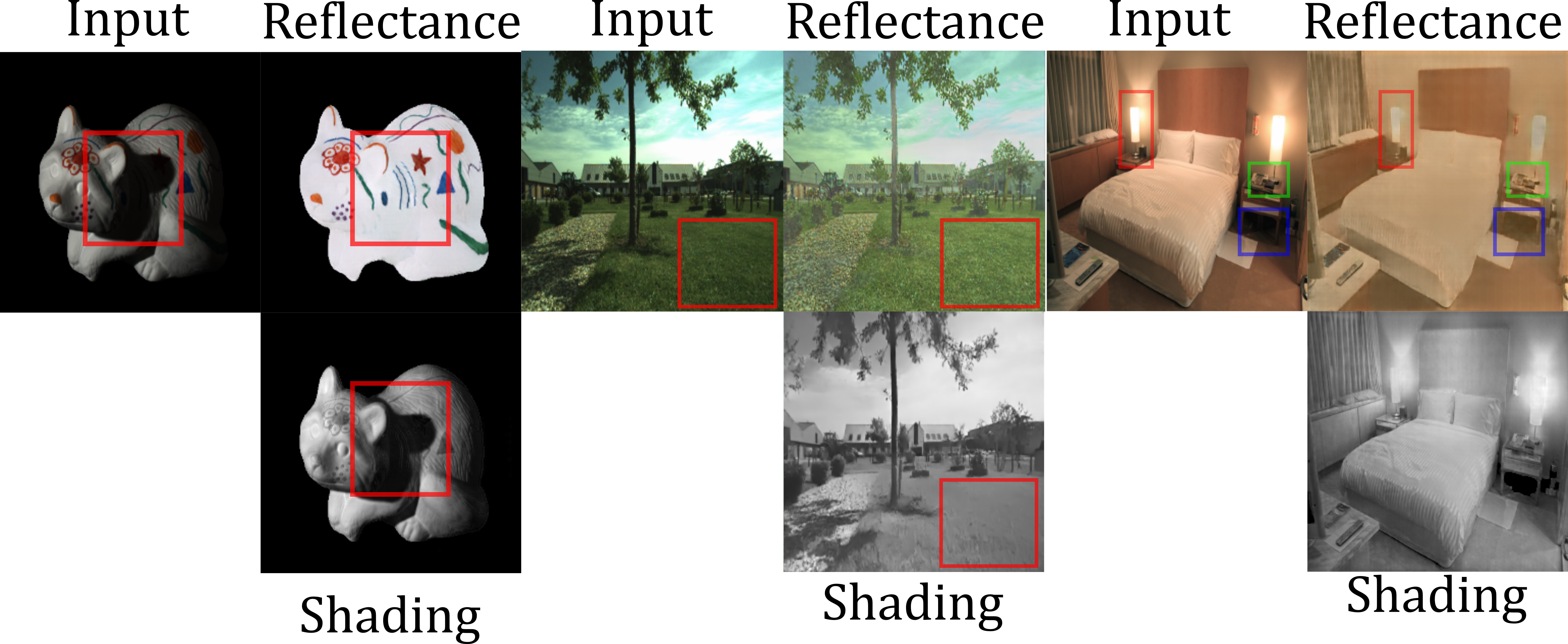

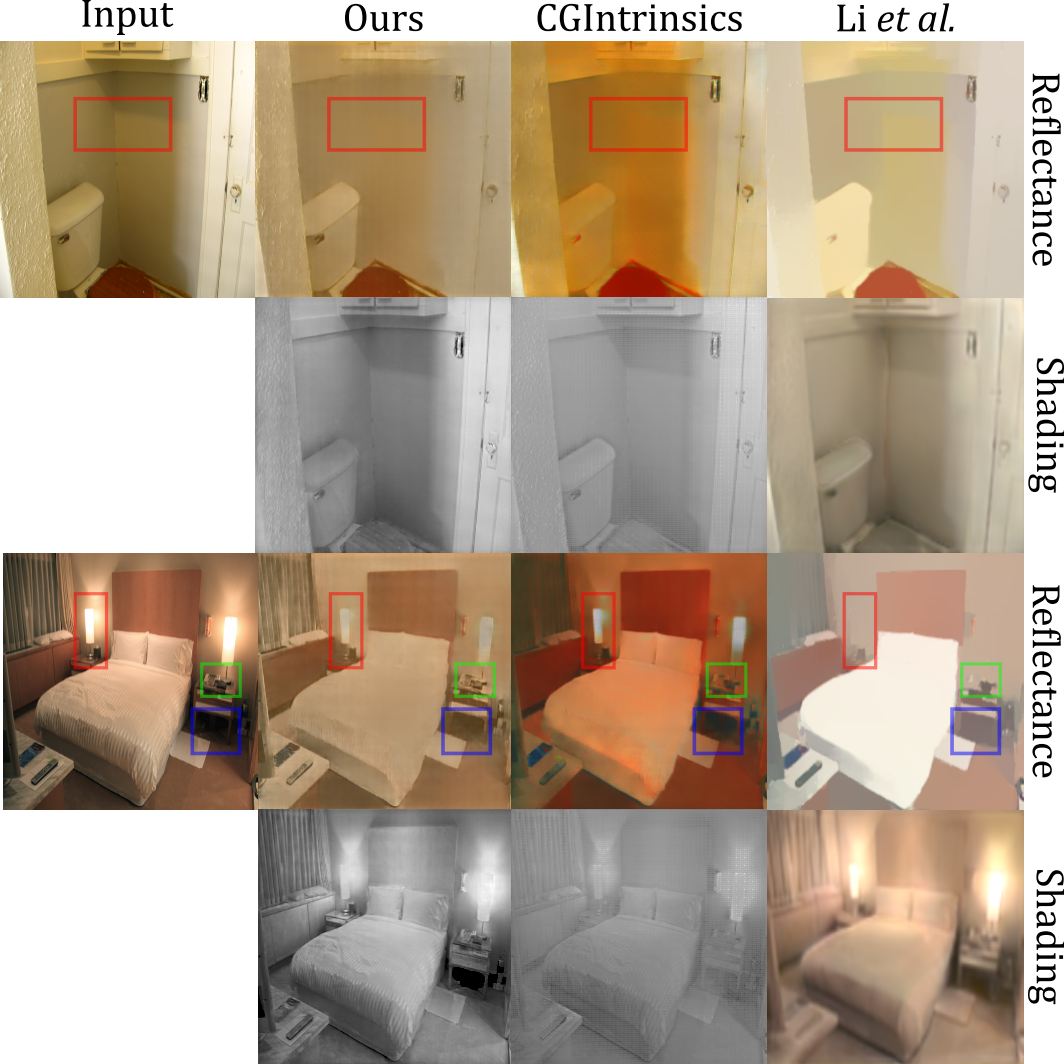

Results of the proposed method on the In the Wild dataset. Despite being trained primarily on outdoor garden images, the proposed method obtains a reflectance that is free of hard negative illumination transitions. In the second example, the bedside light (red box) is well preserved and assigned to the shading component. The small objects (green box) on the bedside table are more distinctive compared to other methods, while additional details underneath the table (blue box) are assigned to the reflectance component.

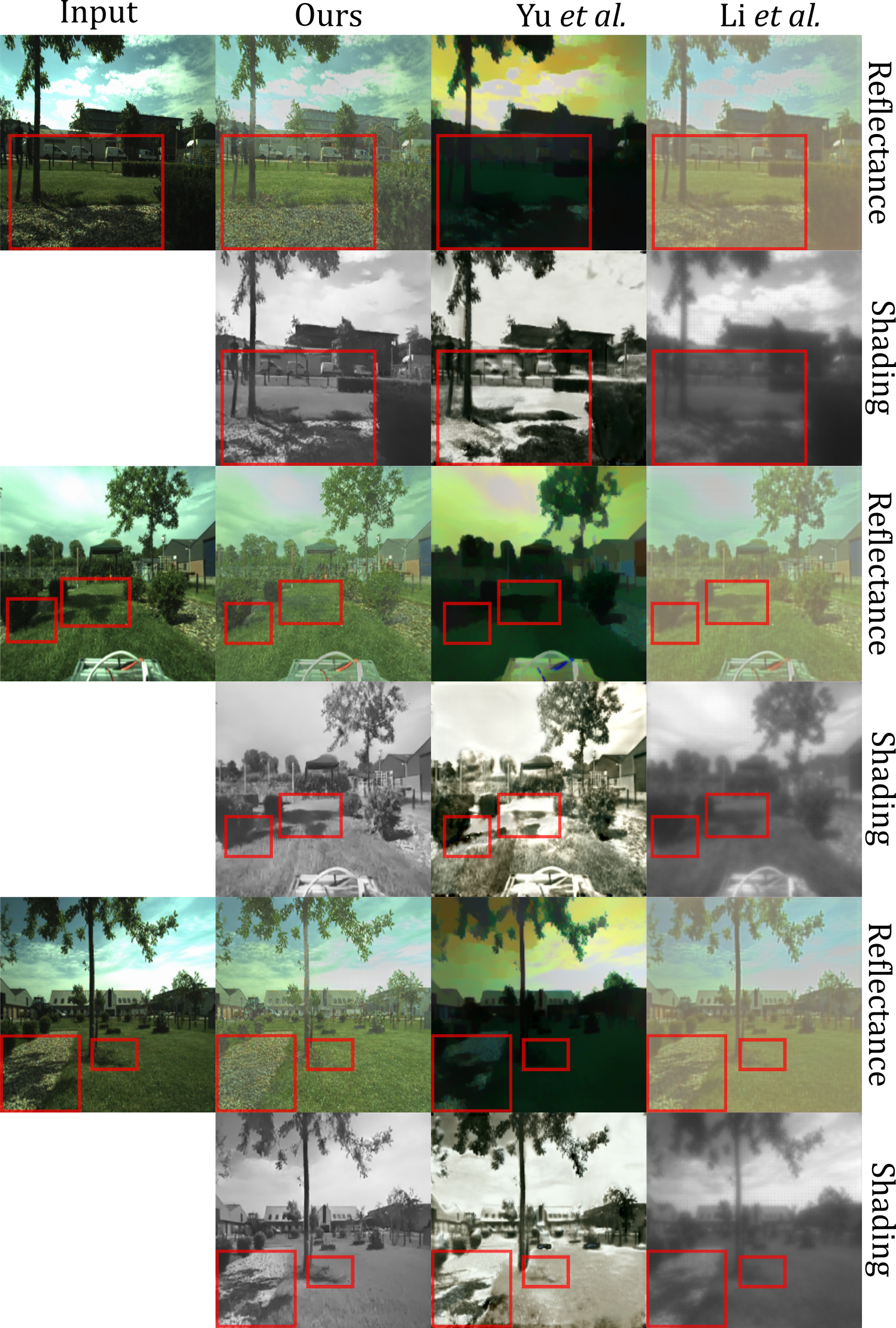

Visuals of the proposed method on the Trimbot dataset. The proposed method is trained and finetuned on a fully synthetic dataset, yet it can recover proper reflectance by removing both soft and hard illumination patterns, while obtaining a smooth shading that is free from shadow-reflectance misclassifications.

Citation

@inproceedings{dasPIENet,
    title = {PIE-Net: Photometric Invariant Edge Guided Network for Intrinsic Image Decomposition},
    author = {Partha Das and Sezer Karaoglu and Theo Gevers},
    booktitle = {IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
    year = {2022}
}