Shuffled ImageNet-Banks for Video Event Detection and Search

Abstract

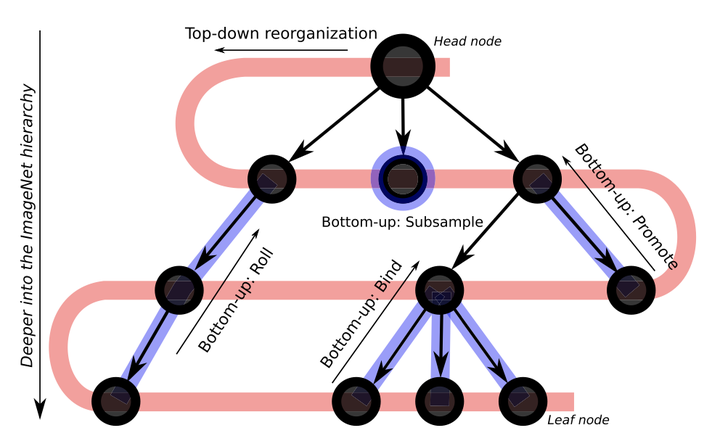

This article addresses the detection and search of events in videos when video examples are scarce or entirely absent during training. ImageNet concept banks have been shown to be effective for such event detection and search. Rather than employing the standard concept bank of 1,000 ImageNet classes, we leverage the full 21,841-class dataset. We identify two problems with using the full dataset: (i) the number of examples per concept is imbalanced, and (ii) not all concepts are equally relevant for events. To address both, we propose to balance large-scale image hierarchies for pre-training by shuffling concepts with bottom-up and top-down operations. Using this strategy, we arrive at the shuffled ImageNet bank, a concept bank with an order of magnitude more concepts than standard ImageNet banks. Compared to standard ImageNet pre-training, our shuffles yield more discriminative representations for training event models from limited video event examples. For event search, the broad range of concepts enables a closer match between textual event queries and concept detections in videos. Experimentally, we show the benefit of the proposed bank for event detection and event search, with state-of-the-art performance for both tasks on the challenging TRECVID Multimedia Event Detection and Ad-Hoc Video Search benchmarks.