| The Influence of Cross-Validation on Video Classification Performance In ACM International Conference on Multimedia 2006. [bibtex] [pdf] [url] |

Abstract

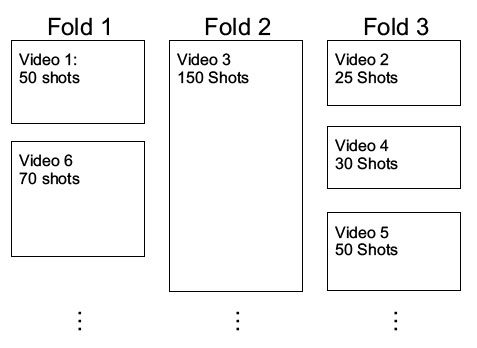

Digital video is sequential in nature. When video data is used in a semantic concept classification task, the episodes are usually summarized with shots. The shots are annotated as containing, or not containing, a certain concept resulting in a labeled dataset. These labeled shots can subsequently be used by supervised learning methods (classifiers) where they are trained to predict the absence or presence of the concept in unseen shots and episodes. The performance of such automatic classification systems is usually estimated with cross-validation. By taking random samples from the dataset for training and testing as such, part of the shots from an episode are in the training set and another part from the same episode is in the test set. Accordingly, data dependence between training and test set is introduced, resulting in too optimistic performance estimates. In this paper, we experimentally show this bias, and propose how this bias can be prevented using “episode-constrained” cross-validation. Moreover, we show that a 15% higher classifier performance can be achieved by using episode constrained cross-validation for classifier parameter tuning.

Bibtex Entry

@InProceedings{vanGemertICM2006,

author = "van Gemert, J. C. and Snoek, C. G. M. and Veenman, C. J. and Smeulders, A. W. M.",

title = "The Influence of Cross-Validation on Video Classification Performance",

booktitle = "ACM International Conference on Multimedia",

pages = "695--698",

year = "2006",

url = "https://ivi.fnwi.uva.nl/isis/publications/2006/vanGemertICM2006",

pdf = "https://ivi.fnwi.uva.nl/isis/publications/2006/vanGemertICM2006/vanGemertICM2006.pdf",

has_image = 1

}