Color Constancy Using 3D Stage Geometry. In IEEE International Conference on Computer Vision, 2009.

Abstract

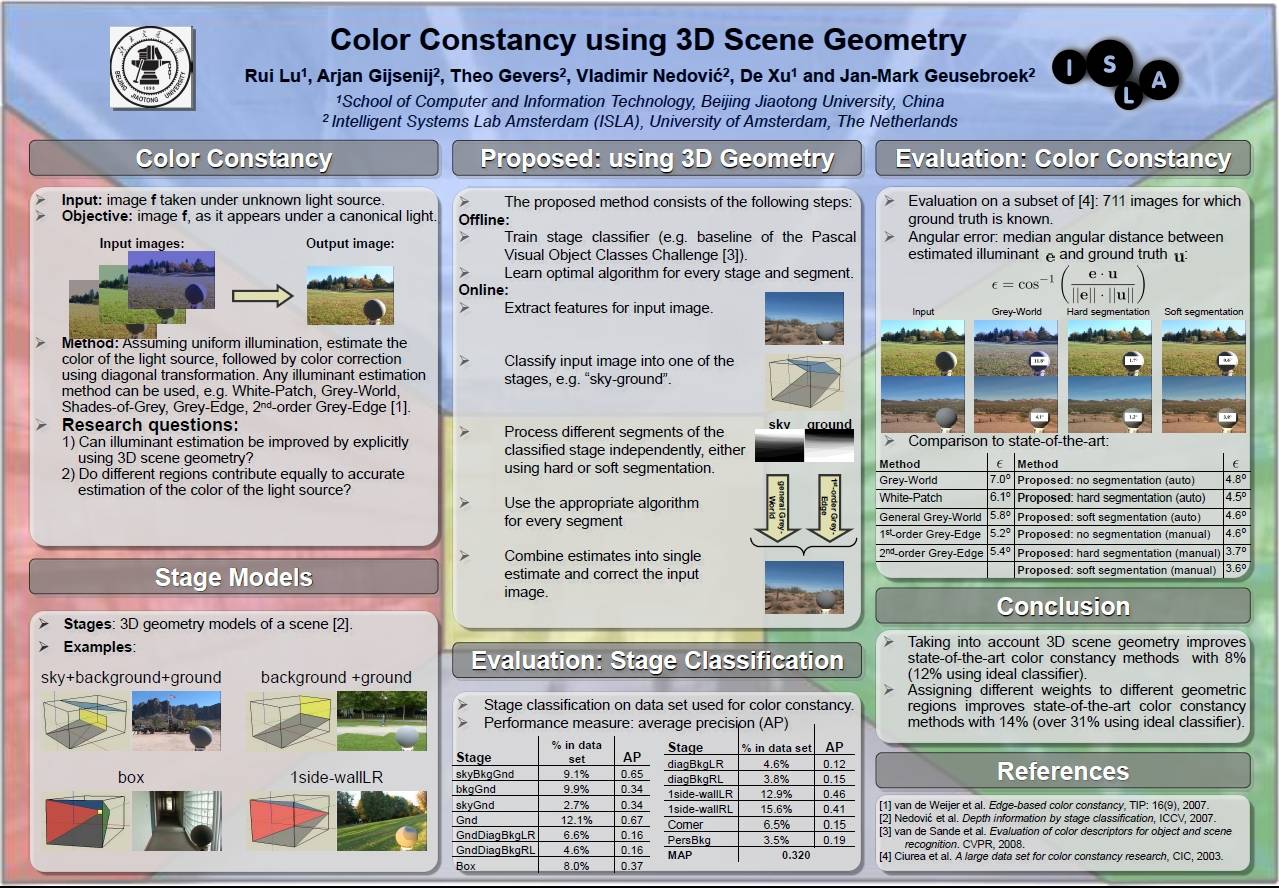

The aim of color constancy is to remove the effect of the color of the light source. As color constancy is inherently an ill-posed problem, most of the existing color constancy algorithms are based on specific imaging assumptions such as the grey-world and white-patch assumptions.
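As a minimal sketch of the two assumptions named above (not code from the paper), the grey-world estimate takes the mean RGB value as the illuminant color, while the white-patch estimate takes the per-channel maximum. The function names, the list-of-tuples pixel representation, and the diagonal correction step are illustrative assumptions.

```python
import math

def normalize(v):
    """Scale a 3-vector to unit length so only the color direction remains."""
    norm = math.sqrt(sum(c * c for c in v))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return tuple(c / norm for c in v)

def grey_world(pixels):
    """Grey-world: assume the average scene reflectance is achromatic,
    so the mean RGB value points in the direction of the illuminant."""
    n = len(pixels)
    mean = tuple(sum(p[i] for p in pixels) / n for i in range(3))
    return normalize(mean)

def white_patch(pixels):
    """White patch: assume the maximum response in each channel is caused
    by a perfectly reflecting surface, so the maxima give the illuminant."""
    maxima = tuple(max(p[i] for p in pixels) for i in range(3))
    return normalize(maxima)

def correct(pixels, illuminant):
    """Diagonal correction: divide each channel by the estimate, scaled so
    that a neutral illuminant leaves the pixels unchanged. Assumes every
    component of the estimate is non-zero."""
    white = 1 / math.sqrt(3)
    return [tuple(p[i] * white / illuminant[i] for i in range(3)) for p in pixels]
```

Both estimators return a unit-length RGB vector, so their outputs can be compared directly or passed to `correct`.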

In this paper, 3D geometry models are used to determine which color constancy method to use for the different geometrical regions found in images. To this end, images are first classified into stages (rough 3D geometry models). According to the stage models, images are divided into different regions using hard and soft segmentation. After that, the best color constancy algorithm is selected for each geometry segment. As a result, light source estimation is tuned to the global scene geometry. Our algorithm opens up the possibility of estimating the remote scene illumination color by distinguishing nearby light sources from distant illuminants.
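The selection step described above can be sketched roughly as follows. This is an illustration under stated assumptions, not the paper's implementation: the stage name `sky_ground`, the region names, the `BEST_METHOD` lookup table, and the 0.5 membership threshold are all hypothetical, and in practice the table would be learned from training data. Hard segmentation corresponds to weights of 0 or 1, soft segmentation to fractional weights.

```python
import math

def _unit(v):
    """Return v scaled to unit length."""
    norm = math.sqrt(sum(c * c for c in v))
    return tuple(c / norm for c in v)

def weighted_grey_world(pixels, weights):
    """Grey-world estimate where each pixel counts by its region weight."""
    total = sum(weights)
    mean = tuple(sum(w * p[i] for p, w in zip(pixels, weights)) / total
                 for i in range(3))
    return _unit(mean)

def weighted_white_patch(pixels, weights, threshold=0.5):
    """White-patch estimate over pixels that mostly belong to the region
    (weight at or above the hypothetical threshold)."""
    members = [p for p, w in zip(pixels, weights) if w >= threshold]
    if not members:
        raise ValueError("no pixels belong to this region")
    return _unit(tuple(max(p[i] for p in members) for i in range(3)))

# Hypothetical lookup: (stage, region) -> estimator assumed to work best there.
BEST_METHOD = {
    ("sky_ground", "sky"): weighted_grey_world,
    ("sky_ground", "ground"): weighted_white_patch,
}

def estimate_per_region(pixels, stage, region_weights):
    """Run the estimator selected for each region of the given stage.

    region_weights maps a region name to one weight per pixel.
    """
    estimates = {}
    for region, weights in region_weights.items():
        method = BEST_METHOD[(stage, region)]
        estimates[region] = method(pixels, weights)
    return estimates
```

How the per-region estimates are combined into a final illuminant is left open here, since the abstract does not specify it.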

Experiments on large-scale image datasets show that the proposed algorithm outperforms state-of-the-art single color constancy algorithms with an improvement of almost 14% in median angular error. When using an ideal classifier (i.e., all of the test images are correctly classified into stages), the proposed method achieves an improvement of 31% in median angular error compared to the best-performing single color constancy algorithm.
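For reference, the angular error is the angle between the estimated and the ground-truth illuminant vectors. The sketch below computes it and a relative improvement in the median; reading the reported percentages as a relative reduction of the median is an assumption, and the function names are illustrative.

```python
import math
import statistics

def angular_error_deg(estimate, ground_truth):
    """Angle in degrees between two RGB illuminant vectors."""
    dot = sum(a * b for a, b in zip(estimate, ground_truth))
    norm = (math.sqrt(sum(a * a for a in estimate))
            * math.sqrt(sum(b * b for b in ground_truth)))
    # Clamp to [-1, 1] to guard against floating-point rounding.
    cos = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos))

def median_improvement(baseline_errors, proposed_errors):
    """Relative reduction of the median angular error (0.14 means 14%)."""
    base = statistics.median(baseline_errors)
    new = statistics.median(proposed_errors)
    return (base - new) / base
```

Because the error depends only on the direction of the vectors, the estimates do not need to be normalized before comparison.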

Info

You can find a list of the images that we used in the paper here.

Bibtex Entry

@InProceedings{LuICCV2009,
  author = "Lu, R. and Gijsenij, A. and Gevers, T. and Xu, D. and Nedovic, V. and Geusebroek, J. M.",
  title = "Color Constancy Using 3D Stage Geometry",
  booktitle = "IEEE International Conference on Computer Vision",
  year = "2009",
  url = "https://ivi.fnwi.uva.nl/isis/publications/2009/LuICCV2009",
  pdf = "https://ivi.fnwi.uva.nl/isis/publications/2009/LuICCV2009/LuICCV2009.pdf",
  has_image = 1
}