Unsupervised Multi-Feature Tag Relevance Learning for Social Image Retrieval. In ACM International Conference on Image and Video Retrieval, 2010.

Abstract

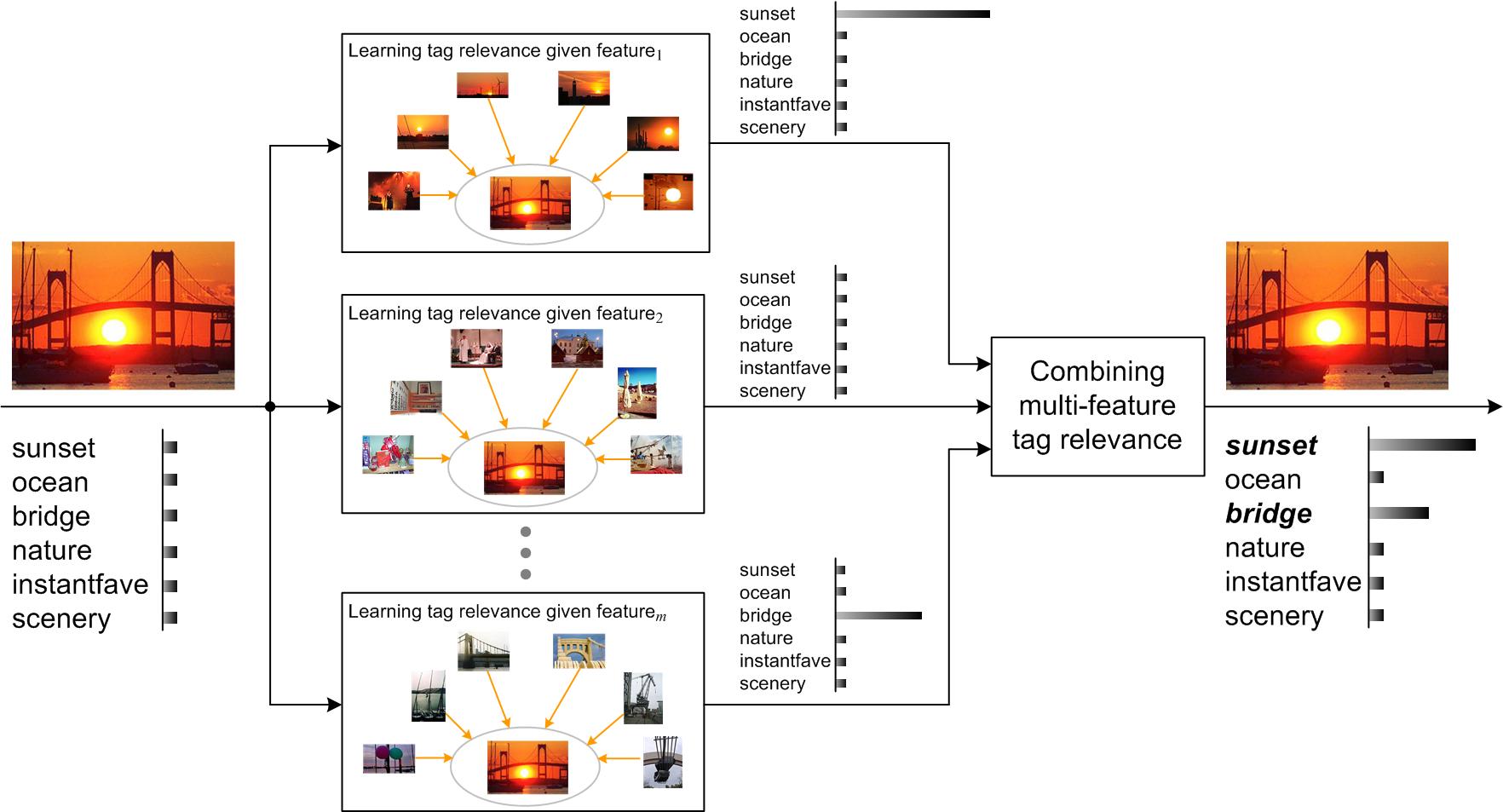

Interpreting the relevance of a user-contributed tag with respect to the visual content of an image is an emerging problem in social image retrieval. In the literature this problem is tackled by analyzing the correlation between tags and images represented by specific visual features. Unfortunately, no single feature represents the visual content completely, e.g., global features are suitable for capturing the gist of scenes, while local features are better for depicting objects. To solve the problem of learning tag relevance given multiple features, we introduce in this paper two simple and effective methods: one is based on the classical Borda Count and the other is a method we name UniformTagger. Both methods combine the output of many tag relevance learners driven by diverse features in an unsupervised, rather than supervised, manner. Experiments on 3.5 million social-tagged images and two test sets verify our proposal. Using learned tag relevance as updated tag frequency for social image retrieval, both Borda Count and UniformTagger outperform retrieval without tag relevance learning and retrieval with single-feature tag relevance learning. Moreover, the two unsupervised methods are comparable to a state-of-the-art supervised alternative, but without the need of any training data.
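As a rough illustration of the Borda Count idea mentioned in the abstract, the sketch below fuses the outputs of several per-feature tag relevance learners for a single image. It is a minimal sketch under stated assumptions, not the paper's implementation: the function name `borda_count`, the feature names, the scores, and the choice to rank the tags of one image are all hypothetical, and ties are broken alphabetically only to keep the output deterministic.

```python
from collections import defaultdict


def borda_count(scores_per_feature):
    """Fuse per-feature tag relevance scores for one image with a Borda Count.

    scores_per_feature maps a feature name to a dict of tag -> relevance score
    (higher means more relevant). Every feature is assumed to score the same
    set of tags. Returns a dict of tag -> total Borda points.
    """
    points = defaultdict(int)
    for scores in scores_per_feature.values():
        # Rank tags from least to most relevant under this feature.
        # Ties are broken by tag name (an arbitrary, illustrative choice).
        ranked = sorted(scores, key=lambda tag: (scores[tag], tag))
        for position, tag in enumerate(ranked):
            # The lowest-ranked tag earns 0 points, the highest earns n - 1.
            points[tag] += position
    return dict(points)


# Hypothetical relevance scores from three learners, each driven by one feature.
example = {
    "global_color": {"beach": 12.0, "sunset": 7.0, "me": 1.0},
    "local_sift": {"beach": 3.0, "sunset": 9.0, "me": 0.5},
    "texture": {"beach": 5.0, "sunset": 2.0, "me": 4.0},
}

fused = borda_count(example)
print(fused)  # {'me': 1, 'sunset': 3, 'beach': 5}
```

Because only rank positions are summed, the learners' raw scores need not share a scale, and no labeled training data is required to set combination weights, which is consistent with the unsupervised setting the abstract describes.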

Info

Best paper award.

Bibtex Entry

@InProceedings{LiICIVR2010,

author = "Li, X. and Snoek, C. G. M. and Worring, M.",

title = "Unsupervised Multi-Feature Tag Relevance Learning for Social Image Retrieval",

booktitle = "ACM International Conference on Image and Video Retrieval",

year = "2010",

url = "https://ivi.fnwi.uva.nl/isis/publications/2010/LiICIVR2010",

pdf = "https://ivi.fnwi.uva.nl/isis/publications/2010/LiICIVR2010/LiICIVR2010.pdf",

has_image = 1

}