Sonify Your Face: Facial Expressions for Sound Generation. In ACM International Conference on Multimedia, 2010.

Abstract

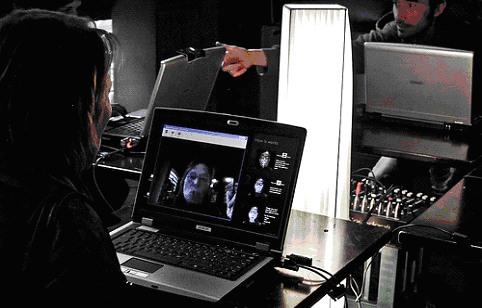

We present a novel visual creativity tool that automatically recognizes facial expressions and tracks facial muscle movements in real time to produce sounds. The facial expression recognition module detects and tracks a face and outputs a feature vector of motions of specific locations in the face. The feature vector is used as input to a Bayesian network which classifies facial expressions into several categories (e.g., angry, disgusted, happy). The classification results are used along with the feature vector to generate a combination of sounds that change in real time depending on the person's facial expressions. We explain the artistic motivation behind the work, the basic components of our tool, and possible applications in the arts (performance, installation) and in the medical domain. Finally, we report on the experience of approximately 25 users of our system at a conference demonstration session and of 9 participants in a pilot study to assess the system's usability, and we discuss our experience installing the work at an important digital arts festival (RE-NEW 2009).

BibTeX Entry

@InProceedings{ValentiICM2010,

author = "Valenti, R. and Jaimes, A. and Sebe, N.",

title = "Sonify Your Face: Facial Expressions for Sound Generation",

booktitle = "ACM International Conference on Multimedia",

year = "2010",

url = "https://ivi.fnwi.uva.nl/isis/publications/2010/ValentiICM2010",

pdf = "https://ivi.fnwi.uva.nl/isis/publications/2010/ValentiICM2010/ValentiICM2010.pdf",

has_image = 1

}