| Quadratic Boosting In Pattern Recognition 2008. [bibtex] [pdf] [url] |

Abstract

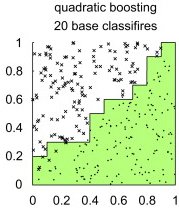

This paper presents a strategy to improve the AdaBoost algorithm with a quadratic combination of base classifiers. We observe that learning this combination is necessary to get better performance and is possible by constructing an intermediate learner operating on the combined linear and quadratic terms. This is not trivial, as the parameters of the base classifiers are not under direct control, obstructing the application of direct optimization. We propose a new method realizing iterative optimization indirectly. First we train a classifier by randomizing the labels of training examples. Subsequently, the input learner is called repeatedly with a systematic update of the labels of the training examples in each round. We show that the quadratic boosting algorithm converges under the condition that the given base learner minimizes the empirical error. We also give an upper bound on the VC-dimension of the new classifier. Our experimental results on 23 standard problems show that quadratic boosting compares favorably with AdaBoost on large data sets at the cost of training speed. The classification time of the two algorithms, however, is equivalent.

Bibtex Entry

@Article{PhamPR2008,

author = "Pham, T. V. and Smeulders, A. W. M.",

title = "Quadratic Boosting",

journal = "Pattern Recognition",

number = "1",

volume = "41",

pages = "331--341",

year = "2008",

url = "https://ivi.fnwi.uva.nl/isis/publications/2008/PhamPR2008",

pdf = "https://ivi.fnwi.uva.nl/isis/publications/2008/PhamPR2008/PhamPR2008.pdf",

has_image = 1

}