Best Practices for Learning Video Concept Detectors from Social Media Examples. In Multimedia Tools and Applications, 2015.

Abstract

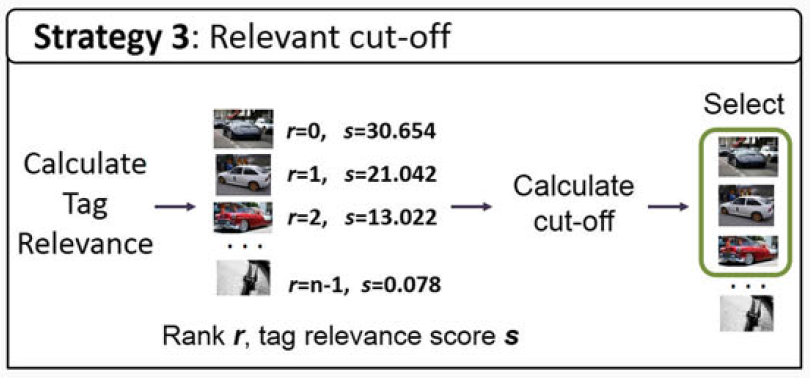

Learning video concept detectors from social media sources, such as Flickr images and YouTube videos, has the potential to address a wide variety of concept queries for video search. While the potential has been recognized by many, and progress on the topic has been impressive, we argue that key questions crucial to knowing how to learn effective video concept detectors from social media examples remain open. As an initial attempt to answer these questions, we conduct an experimental study using a video search engine that is capable of learning concept detectors from social media examples, be it socially tagged videos or socially tagged images. Within the video search engine we investigate three positive example selection strategies, three negative example selection strategies, and three learning strategies. The performance is evaluated on the challenging TRECVID 2012 benchmark consisting of 600 h of Internet video. From the experiments we derive four best practices: (1) tagged images are a better source for learning video concepts than tagged videos, (2) selecting tag-relevant positive training examples is always beneficial, (3) selecting relevant negative examples is advantageous and should be treated differently for video and image sources, and (4) learning concept detectors from training data selected for relevance before learning is better than incorporating the relevance during the learning process. The best practices within our video search engine lead to state-of-the-art performance in the TRECVID 2013 benchmark for concept detection without manually provided annotations.

Bibtex Entry

@Article{KordumovaMTA2015,

author = "Kordumova, S. and Li, X. and Snoek, C. G. M.",

title = "Best Practices for Learning Video Concept Detectors from Social Media Examples",

journal = "Multimedia Tools and Applications",

year = "2015",

url = "https://ivi.fnwi.uva.nl/isis/publications/2015/KordumovaMTA2015",

pdf = "https://ivi.fnwi.uva.nl/isis/publications/2015/KordumovaMTA2015/KordumovaMTA2015.pdf",

has_image = 1

}